In this chapter we'll set up your environment for developing Vulkan applications and install some useful libraries. All of the tools we'll use, with the exception of the compiler, are compatible with Windows, Linux and MacOS, but the steps for installing them differ a bit, which is why they're described separately here.

Windows

If you're developing for Windows, then I will assume that you are using Visual Studio to compile your code. For complete C++17 support, you need to use either Visual Studio 2017 or 2019. The steps outlined below were written for VS 2017.

Vulkan SDK

The most important component you'll need for developing Vulkan applications is the SDK. It includes the headers, standard validation layers, debugging tools and a loader for the Vulkan functions. The loader looks up the functions in the driver at runtime, similarly to GLEW for OpenGL - if you're familiar with that.
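To make the idea of a runtime lookup concrete, here is a minimal sketch in plain C++. It is not the Vulkan loader and uses no Vulkan headers: ToyLoader, DriverFn, driverDouble and the name "toyDouble" are all hypothetical stand-ins chosen for illustration. It only shows the general pattern of asking for a function by name and receiving a pointer that may be null.

```cpp
#include <cassert>
#include <string>
#include <unordered_map>

// Hypothetical signature of a "driver" entry point (not a real Vulkan type).
using DriverFn = int (*)(int);

// Hypothetical function that the toy driver exports.
int driverDouble(int x) { return 2 * x; }

// Toy loader: maps exported names to function addresses.
class ToyLoader {
public:
    ToyLoader() { table_["toyDouble"] = &driverDouble; }

    // Returns the address registered for `name`, or nullptr when the
    // toy driver does not export such a function.
    DriverFn getProcAddr(const std::string& name) const {
        auto it = table_.find(name);
        return it == table_.end() ? nullptr : it->second;
    }

private:
    std::unordered_map<std::string, DriverFn> table_;
};

// Looks the function up at run time, then calls through the pointer.
int callDouble(const ToyLoader& loader, int x) {
    DriverFn fn = loader.getProcAddr("toyDouble");
    assert(fn != nullptr && "toy driver does not export toyDouble");
    return fn(x);
}
```

Calling callDouble(loader, 21) on a default-constructed ToyLoader goes through the table rather than linking to driverDouble directly, which is the property the paragraph above describes.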

The SDK can be downloaded from the LunarG website using the buttons at the bottom of the page. You don't have to create an account, but it will give you access to some additional documentation that may be useful to you.

Proceed through the installation and pay attention to the install location of the SDK. The first thing we'll do is verify that your graphics card and driver properly support Vulkan. Go to the directory where you installed the SDK, open the Bin directory and run the vkcube.exe demo. You should see the following:

If you receive an error message, ensure that your drivers are up to date and include the Vulkan runtime, and that your graphics card is supported. See the introduction chapter for links to drivers from the major vendors.

There are two other programs in this directory that will be useful for development: glslangValidator.exe and glslc.exe. They will be used to compile shaders from human-readable GLSL to bytecode. We'll cover this in depth in the shader modules chapter. The Bin directory also contains the binaries of the Vulkan loader and the validation layers, while the Lib directory contains the libraries.

Lastly, there's the Include directory that contains the Vulkan headers. Feel free to explore the other files, but we won't need them for this tutorial.

GLFW

As mentioned before, Vulkan by itself is a platform-agnostic API and does not include tools for creating a window to display the rendered results. To benefit from the cross-platform advantages of Vulkan and to avoid the horrors of Win32, we'll create the window with the GLFW library, which supports Windows, Linux and MacOS. There are other libraries available for this purpose, like SDL, but the advantage of GLFW is that it also abstracts away some of the other platform-specific things in Vulkan besides just window creation.

You can find the latest release of GLFW on the official website. In this tutorial we'll be using the 64-bit binaries, but you can of course also choose to build in 32-bit mode. In that case make sure to link with the Vulkan SDK binaries in the Lib32 directory instead of Lib. After downloading it, extract the archive to a convenient location. I've chosen to create a Libraries directory in the Visual Studio directory under documents.

GLM

Unlike DirectX 12, Vulkan does not include a library for linear algebra operations, so we'll have to download one. GLM is a nice library that is designed for use with graphics APIs and is also commonly used with OpenGL.

GLM is a header-only library, so just download the latest version and store it in a convenient location. You should have a directory structure similar to the following now:

Setting up Visual Studio

Now that you've installed all of the dependencies, we can set up a basic Visual Studio project for Vulkan and write a little bit of code to make sure that everything works.

Start Visual Studio and create a new Windows Desktop Wizard project by entering a name and pressing OK.

Make sure that Console Application (.exe) is selected as application type so that we have a place to print debug messages to, and check Empty Project to prevent Visual Studio from adding boilerplate code.

Press OK to create the project and add a C++ source file. You should already know how to do that, but the steps are included here for completeness.

Now add the following code to the file. Don't worry about trying to understand it right now; we're just making sure that you can compile and run Vulkan applications. We'll start from scratch in the next chapter.

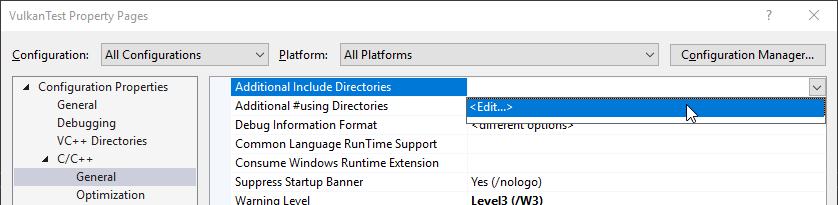

Let's now configure the project to get rid of the errors. Open the project properties dialog and ensure that All Configurations is selected, because most of the settings apply to both Debug and Release mode.

Go to C++ -> General -> Additional Include Directories and select the Edit... entry in the dropdown box.

Add the header directories for Vulkan, GLFW and GLM:

Next, open the editor for library directories under Linker -> General:

And add the locations of the object files for Vulkan and GLFW:

Go to Linker -> Input and select the Edit... entry in the Additional Dependencies dropdown box.

Enter the names of the Vulkan and GLFW object files:

And finally change the compiler to support C++17 features:

You can now close the project properties dialog. If you did everything right, you should no longer see any errors highlighted in the code.

Finally, ensure that you are actually compiling in 64 bit mode:

Press F5 to compile and run the project and you should see a command prompt and a window pop up like this:

The number of extensions should be non-zero. Congratulations, you're all set for playing with Vulkan!

Linux

These instructions will be aimed at Ubuntu users, but you may be able to follow along by changing the apt commands to the package manager commands that are appropriate for you. You should have a compiler that supports C++17 (GCC 7+ or Clang 5+). You'll also need make.

Vulkan Packages

The most important components you'll need for developing Vulkan applications on Linux are the Vulkan loader, validation layers, and a couple of command-line utilities to test whether your machine is Vulkan-capable:

- sudo apt install vulkan-tools: Command-line utilities, most importantly vulkaninfo and vkcube. Run these to confirm your machine supports Vulkan.
- sudo apt install libvulkan-dev: Installs the Vulkan loader. The loader looks up the functions in the driver at runtime, similarly to GLEW for OpenGL - if you're familiar with that.
- sudo apt install vulkan-validationlayers-dev spirv-tools: Installs the standard validation layers and required SPIR-V tools. These are crucial when debugging Vulkan applications, and we'll discuss them in the upcoming chapter.

If installation was successful, you should be all set with the Vulkan portion. Remember to run vkcube and ensure you see the following pop up in a window:

If you receive an error message, ensure that your drivers are up to date and include the Vulkan runtime, and that your graphics card is supported. See the introduction chapter for links to drivers from the major vendors.

GLFW

As mentioned before, Vulkan by itself is a platform-agnostic API and does not include tools for creating a window to display the rendered results. To benefit from the cross-platform advantages of Vulkan and to avoid the horrors of X11, we'll create the window with the GLFW library, which supports Windows, Linux and MacOS. There are other libraries available for this purpose, like SDL, but the advantage of GLFW is that it also abstracts away some of the other platform-specific things in Vulkan besides just window creation.

We'll install GLFW with the following command: sudo apt install libglfw3-dev

GLM

Unlike DirectX 12, Vulkan does not include a library for linear algebra operations, so we'll have to download one. GLM is a nice library that is designed for use with graphics APIs and is also commonly used with OpenGL.

It is a header-only library that can be installed from the libglm-dev package: sudo apt install libglm-dev

Shader Compiler

We have just about all we need, except we'll want a program to compile shaders from the human-readable GLSL to bytecode.

Two popular shader compilers are Khronos Group's glslangValidator and Google's glslc. The latter has a familiar GCC- and Clang-like usage, so we'll go with that: download Google's unofficial binaries and copy glslc to your /usr/local/bin. Note that you may need to use sudo depending on your permissions. To test, run glslc and it should rightfully complain that we didn't pass any shaders to compile:

glslc: error: no input files

We'll cover glslc in depth in the shader modules chapter.

Setting up a makefile project

Now that you have installed all of the dependencies, we can set up a basic makefile project for Vulkan and write a little bit of code to make sure that everything works.

Create a new directory at a convenient location with a name like VulkanTest. Create a source file called main.cpp and insert the following code. Don't worry about trying to understand it right now; we're just making sure that you can compile and run Vulkan applications. We'll start from scratch in the next chapter.

Next, we'll write a makefile to compile and run this basic Vulkan code. Create a new empty file called Makefile. I will assume that you already have some basic experience with makefiles, like how variables and rules work. If not, you can get up to speed very quickly with this tutorial.

We'll first define a couple of variables to simplify the remainder of the file. Define a CFLAGS variable that will specify the basic compiler flags:

We're going to use modern C++ (-std=c++17), and we'll set optimization level to O2. We can remove -O2 to compile programs faster, but we should remember to place it back for release builds.
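One way to confirm that -std=c++17 actually reached the compiler is to inspect the standard __cplusplus macro, which GCC and Clang set to 201703L in C++17 mode. The sketch below is only an illustration; the helper names languageStandard, isCpp17OrLater, describeStandard and requireCpp17 are made up for this example and are not part of the tutorial's code.

```cpp
#include <cassert>
#include <string>

// Value of the standard __cplusplus macro for this translation unit.
constexpr long languageStandard() { return __cplusplus; }

// True when the compiler was run in C++17 mode or newer.
constexpr bool isCpp17OrLater() { return languageStandard() >= 201703L; }

// Human-readable summary, handy for printing at program start-up.
std::string describeStandard() {
    if (languageStandard() > 201703L) return "newer than C++17";
    if (languageStandard() == 201703L) return "C++17";
    return "older than C++17";
}

// Aborts in debug builds if the flag is missing; with NDEBUG defined
// the assert expands to nothing, so this becomes a no-op.
void requireCpp17() {
    assert(isCpp17OrLater() && "rebuild with -std=c++17");
}
```

Printing describeStandard() from main, or calling requireCpp17() early, gives quick feedback if the flag was dropped from CFLAGS. Note that MSVC reports a different value for __cplusplus unless it is given /Zc:__cplusplus, so this check is aimed at the GCC and Clang builds described here.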

Similarly, define the linker flags in a LDFLAGS variable:


The flag -lglfw is for GLFW, and -lvulkan links with the Vulkan function loader. The remaining flags are low-level system libraries that GLFW itself depends on for threading and window management.

Specifying the rule to compile VulkanTest is straightforward now. Make sure to use tabs for indentation instead of spaces.

Verify that this rule works by saving the makefile and running make in the directory with main.cpp and Makefile. This should result in a VulkanTest executable.

We'll now define two more rules, test and clean, where the former will run the executable and the latter will remove a built executable:

Running make test should show the program running successfully, and displaying the number of Vulkan extensions. The application should exit with the success return code (0) when you close the empty window. You should now have a complete makefile that resembles the following:

You can now use this directory as a template for your Vulkan projects. Make a copy, rename it to something like HelloTriangle and remove all of the code in main.cpp.

You are now all set for the real adventure.

MacOS

These instructions will assume you are using Xcode and the Homebrew package manager. Also, keep in mind that you will need at least MacOS version 10.11, and your device needs to support the Metal API.

Vulkan SDK

The most important component you'll need for developing Vulkan applications is the SDK. It includes the headers, standard validation layers, debugging tools and a loader for the Vulkan functions. The loader looks up the functions in the driver at runtime, similarly to GLEW for OpenGL - if you're familiar with that.

The SDK can be downloaded from the LunarG website using the buttons at the bottom of the page. You don't have to create an account, but it will give you access to some additional documentation that may be useful to you.

The SDK version for MacOS internally uses MoltenVK. There is no native support for Vulkan on MacOS, so MoltenVK acts as a layer that translates Vulkan API calls to Apple's Metal graphics framework. With this you can take advantage of the debugging and performance benefits of Apple's Metal framework.

After downloading it, simply extract the contents to a folder of your choice (keep in mind you will need to reference it when creating your projects on Xcode). Inside the extracted folder, in the Applications folder you should have some executable files that will run a few demos using the SDK. Run the vkcube executable and you will see the following:

GLFW

As mentioned before, Vulkan by itself is a platform-agnostic API and does not include tools for creating a window to display the rendered results. We'll create the window with the GLFW library, which supports Windows, Linux and MacOS. There are other libraries available for this purpose, like SDL, but the advantage of GLFW is that it also abstracts away some of the other platform-specific things in Vulkan besides just window creation.

To install GLFW on MacOS we will use the Homebrew package manager to get the glfw package: brew install glfw

GLM

Vulkan does not include a library for linear algebra operations, so we'll have to download one. GLM is a nice library that is designed for use with graphics APIs and is also commonly used with OpenGL.

It is a header-only library that can be installed from the glm package: brew install glm

Setting up Xcode

Now that all the dependencies are installed we can set up a basic Xcode project for Vulkan. Most of the instructions here are essentially a lot of 'plumbing' so we can get all the dependencies linked to the project. Also, keep in mind that whenever the following instructions mention the folder vulkansdk, we are referring to the folder where you extracted the Vulkan SDK.

Start Xcode and create a new Xcode project. On the window that will open select Application > Command Line Tool.

Select Next, write a name for the project and for Language select C++.

Press Next and the project should have been created. Now, let's change the code in the generated main.cpp file to the following code:

Keep in mind that you are not required to understand everything this code is doing yet; we are just setting up some API calls to make sure everything is working.

Xcode should already be showing some errors such as libraries it cannot find. We will now start configuring the project to get rid of those errors. On the Project Navigator panel select your project. Open the Build Settings tab and then:

- Find the Header Search Paths field and add a link to /usr/local/include (this is where Homebrew installs headers, so the glm and glfw3 header files should be there) and a link to vulkansdk/macOS/include for the Vulkan headers.
- Find the Library Search Paths field and add a link to /usr/local/lib (again, this is where Homebrew installs libraries, so the glm and glfw3 lib files should be there) and a link to vulkansdk/macOS/lib.

It should look like so (obviously, paths will be different depending on where you placed your files):

Now, in the Build Phases tab, on Link Binary With Libraries we will add both the glfw3 and the vulkan frameworks. To make things easier we will be adding the dynamic libraries in the project (you can check the documentation of these libraries if you want to use the static frameworks).

- For glfw, open the folder /usr/local/lib and there you will find a file with a name like libglfw.3.x.dylib ('x' is the library's version number, which might be different depending on when you downloaded the package from Homebrew). Simply drag that file to the Linked Frameworks and Libraries tab in Xcode.
- For vulkan, go to vulkansdk/macOS/lib. Do the same for both files libvulkan.1.dylib and libvulkan.1.x.xx.dylib (where 'x' will be the version number of the SDK you downloaded).

After adding those libraries, in the same tab on Copy Files change Destination to 'Frameworks', clear the subpath and deselect 'Copy only when installing'. Click on the '+' sign and add all three of those frameworks here as well.

Your Xcode configuration should look like:

The last thing you need to set up is a couple of environment variables. On the Xcode toolbar go to Product > Scheme > Edit Scheme..., and in the Arguments tab add the following two environment variables:

- VK_ICD_FILENAMES = vulkansdk/macOS/share/vulkan/icd.d/MoltenVK_icd.json
- VK_LAYER_PATH = vulkansdk/macOS/share/vulkan/explicit_layer.d

It should look like so:

Finally, you should be all set! Now if you run the project (remembering to set the build configuration to Debug or Release depending on the configuration you chose) you should see the following:

The number of extensions should be non-zero. The other logs are from the libraries; you might get different messages from those depending on your configuration.

You are now all set for the real thing.

Important: OpenGL was deprecated in macOS 10.14. To create high-performance code on GPUs, use the Metal framework instead. See Metal.

You can tell that Apple has an implementation of OpenGL on its platform by looking at the user interface for many of the applications that are installed with OS X. The reflections built into iChat (Figure 1-1) provide one of the more notable examples. The responsiveness of the windows, the instant results of applying an effect in iPhoto, and many other operations in OS X are due to the use of OpenGL. OpenGL is available to all Macintosh applications.

OpenGL for OS X is implemented as a set of frameworks that contain the OpenGL runtime engine and its drawing software. These frameworks use platform-neutral virtual resources to free your programming as much as possible from the underlying graphics hardware. OS X provides a set of application programming interfaces (APIs) that Cocoa applications can use to support OpenGL drawing.

This chapter provides an overview of OpenGL and the interfaces your application uses on the Mac platform to tap into it.

OpenGL Concepts

To understand how OpenGL fits into OS X and your application, you should first understand how OpenGL is designed.

OpenGL Implements a Client-Server Model

OpenGL uses a client-server model, as shown in Figure 1-2. When your application calls an OpenGL function, it talks to an OpenGL client. The client delivers drawing commands to an OpenGL server. The nature of the client, the server, and the communication path between them is specific to each implementation of OpenGL. For example, the server and clients could be on different computers, or they could be different processes on the same computer.

A client-server model allows the graphics workload to be divided between the client and the server. For example, all Macintosh computers ship with dedicated graphics hardware that is optimized to perform graphics calculations in parallel. Figure 1-3 shows a common arrangement of CPUs and GPUs. With this hardware configuration, the OpenGL client executes on the CPU and the server executes on the GPU.

OpenGL Commands Can Be Executed Asynchronously

A benefit of the OpenGL client-server model is that the client can return control to the application before the command has finished executing. An OpenGL client may also buffer or delay execution of OpenGL commands. If OpenGL required all commands to complete before returning control to the application, then either the CPU or the GPU would be idle waiting for the other to provide it data, resulting in reduced performance.

Some OpenGL commands implicitly or explicitly require the client to wait until some or all previously submitted commands have completed. OpenGL applications should be designed to reduce the frequency of client-server synchronizations. See OpenGL Application Design Strategies for more information on how to design your OpenGL application.

OpenGL Commands Are Executed In Order

OpenGL guarantees that commands are executed in the order they are received by OpenGL.
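The two properties above, deferred execution and in-order execution, can be pictured with a small command buffer. This is a toy model in plain C++, not OpenGL code: ToyCommandClient, submit, finish and pendingCount are hypothetical names, and the "server" is simply the loop inside finish.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <queue>
#include <utility>
#include <vector>

// Hypothetical stand-in for an OpenGL client: it records commands and
// returns to the caller immediately instead of executing them.
class ToyCommandClient {
public:
    // Buffers a command; nothing is executed yet.
    void submit(std::function<void()> command) {
        assert(command && "cannot submit an empty command");
        pending_.push(std::move(command));
    }

    // Plays the "server" role: runs every buffered command in the order
    // it was submitted. Returning from here is a synchronization point.
    void finish() {
        while (!pending_.empty()) {
            std::function<void()> command = std::move(pending_.front());
            pending_.pop();
            command();
        }
    }

    // Number of commands submitted but not yet executed.
    std::size_t pendingCount() const { return pending_.size(); }

private:
    std::queue<std::function<void()>> pending_;
};
```

If two commands that append 1 and 2 to a std::vector<int> are submitted, the vector stays empty until finish is called and then holds 1 followed by 2. A real implementation would drain the queue on the GPU while the application keeps working, which is why frequent synchronization points cost performance.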

OpenGL Copies Client Data at Call-Time

When an application calls an OpenGL function, the OpenGL client copies any data provided in the parameters before returning control to the application. For example, if a parameter points at an array of vertex data stored in application memory, OpenGL must copy that data before returning. Therefore, an application is free to change memory it owns regardless of calls it makes to OpenGL.

The data that the client copies is often reformatted before it is transmitted to the server. Copying, modifying, and transmitting parameters to the server adds overhead to calling OpenGL. Applications should be designed to minimize copy overhead.
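As an illustration of copy-at-call-time semantics, the sketch below models a client that snapshots the caller's array before returning. It is plain C++ with hypothetical names (ToyVertexClient, uploadVertices, staged) and makes no OpenGL calls; it only demonstrates why the application may safely overwrite its own memory afterwards, and why each call carries a copy cost.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical client that copies parameter data during the call.
class ToyVertexClient {
public:
    // Copies `count` floats out of caller-owned memory before returning,
    // so later changes to `data` cannot affect the staged copy.
    void uploadVertices(const float* data, std::size_t count) {
        assert((data != nullptr || count == 0) && "null data with nonzero count");
        staged_.assign(data, data + count);
    }

    // The client's private copy, as it would be handed to the server.
    const std::vector<float>& staged() const { return staged_; }

private:
    std::vector<float> staged_;
};
```

After uploading an array that starts with 1.0f and then overwriting the caller's first element, staged()[0] is still 1.0f. The assign call is the per-call overhead the paragraph above refers to, so batching data into fewer, larger calls reduces it.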

OpenGL Relies on Platform-Specific Libraries For Critical Functionality

OpenGL provides a rich set of cross-platform drawing commands, but does not define functions to interact with an operating system's graphics subsystem. Instead, OpenGL expects each implementation to define an interface to create rendering contexts and associate them with the graphics subsystem. A rendering context holds all of the data stored in the OpenGL state machine. Allowing multiple contexts allows the state in one machine to be changed by an application without affecting other contexts.

Associating OpenGL with the graphic subsystem usually means allowing OpenGL content to be rendered to a specific window. When content is associated with a window, the implementation creates whatever resources are required to allow OpenGL to render and display images.

OpenGL in OS X

OpenGL in OS X implements the OpenGL client-server model using a common OpenGL framework and plug-in drivers. The framework and driver combine to implement the client portion of OpenGL, as shown in Figure 1-4. Dedicated graphics hardware provides the server. Although this is the common scenario, Apple also provides a software renderer implemented entirely on the CPU.

OS X supports a display space that can include multiple dissimilar displays, each driven by different graphics cards with different capabilities. In addition, multiple OpenGL renderers can drive each graphics card. To accommodate this versatility, OpenGL for OS X is segmented into well-defined layers: a window system layer, a framework layer, and a driver layer, as shown in Figure 1-5. This segmentation allows for plug-in interfaces to both the window system layer and the framework layer. Plug-in interfaces offer flexibility in software and hardware configuration without violating the OpenGL standard.

The window system layer is an OS X–specific layer that your application uses to create OpenGL rendering contexts and associate them with the OS X windowing system. The NSOpenGL classes and Core OpenGL (CGL) API also provide some additional controls for how OpenGL operates on that context. See OpenGL APIs Specific to OS X for more information. Finally, this layer also includes the OpenGL libraries—GL, GLU, and GLUT. (See Apple-Implemented OpenGL Libraries for details.)

The common OpenGL framework layer is the software interface to the graphics hardware. This layer contains Apple's implementation of the OpenGL specification.

The driver layer contains the optional GLD plug-in interface and one or more GLD plug-in drivers, which may have different software and hardware support capabilities. The GLD plug-in interface supports third-party plug-in drivers, allowing third-party hardware vendors to provide drivers optimized to take best advantage of their graphics hardware.

Accessing OpenGL Within Your Application

The programming interfaces that your application calls fall into two categories: those specific to the Macintosh platform and those defined by the OpenGL Working Group. The Apple-specific programming interfaces are what Cocoa applications use to communicate with the OS X windowing system. These APIs don't create OpenGL content; they manage content, direct it to a drawing destination, and control various aspects of the rendering operation. Your application calls the OpenGL APIs to create content. OpenGL routines accept vertex, pixel, and texture data and assemble the data to create an image. The final image resides in a framebuffer, which is presented to the user through the windowing-system specific API.

OpenGL APIs Specific to OS X

OS X offers two easy-to-use APIs that are specific to the Macintosh platform: the NSOpenGL classes and the CGL API. Throughout this document, these APIs are referred to as the Apple-specific OpenGL APIs.

Cocoa provides many classes specifically for OpenGL:

- The NSOpenGLContext class implements a standard OpenGL rendering context.
- The NSOpenGLPixelFormat class is used by an application to specify the parameters used to create the OpenGL context.
- The NSOpenGLView class is a subclass of NSView that uses NSOpenGLContext and NSOpenGLPixelFormat to display OpenGL content in a view. Applications that subclass NSOpenGLView do not need to directly subclass NSOpenGLPixelFormat or NSOpenGLContext. Applications that need customization or flexibility can subclass NSView and create NSOpenGLPixelFormat and NSOpenGLContext objects manually.
- The NSOpenGLLayer class allows your application to integrate OpenGL drawing with Core Animation.
- The NSOpenGLPixelBuffer class provides hardware-accelerated offscreen drawing.

The Core OpenGL API (CGL) resides in the OpenGL framework and is used to implement the NSOpenGL classes. CGL offers the most direct access to system functionality and provides the highest level of graphics performance and control for drawing to the full screen. CGL Reference provides a complete description of this API.

Apple-Implemented OpenGL Libraries

OS X also provides the full suite of graphics libraries that are part of every implementation of OpenGL: GL, GLU, GLUT, and GLX. Two of these—GL and GLU—provide low-level drawing support. The other two—GLUT and GLX—support drawing to the screen.

Your application typically interfaces directly with the core OpenGL library (GL), the OpenGL Utility library (GLU), and the OpenGL Utility Toolkit (GLUT). The GL library provides a low-level modular API that allows you to define graphical objects. It supports the core functions defined by the OpenGL specification. It provides support for two fundamental types of graphics primitives: objects defined by sets of vertices, such as line segments and simple polygons, and objects that are pixel-based images, such as filled rectangles and bitmaps. The GL API does not handle complex custom graphical objects; your application must decompose them into simpler geometries.

The GLU library combines functions from the GL library to support more advanced graphics features. It runs on all conforming implementations of OpenGL. GLU is capable of creating and handling complex polygons (including quartic equations), processing nonuniform rational b-spline curves (NURBs), scaling images, and decomposing a surface to a series of polygons (tessellation).

The GLUT library provides a cross-platform API for performing operations associated with the user windowing environment—displaying and redrawing content, handling events, and so on. It is implemented on most UNIX, Linux, and Windows platforms. Code that you write with GLUT can be reused across multiple platforms. However, such code is constrained by a generic set of user interface elements and event-handling options. This document does not show how to use GLUT. The GLUTBasics sample project shows you how to get started with GLUT.

GLX is an OpenGL extension that supports using OpenGL within a window provided by the X Window system. X11 for OS X is available as an optional installation. (It's not shown in Figure 1-6.) See OpenGL Programming for the X Window System, published by Addison Wesley for more information.

This document does not show how to use these libraries. For detailed information, either go to the OpenGL Foundation website http://www.opengl.org or see the most recent version of 'The Red book'—OpenGL Programming Guide, published by Addison Wesley.

Terminology

There are a number of terms that you'll want to understand so that you can write code effectively using OpenGL: renderer, renderer attributes, buffer attributes, pixel format objects, rendering contexts, drawable objects, and virtual screens. As an OpenGL programmer, some of these may seem familiar to you. However, understanding the Apple-specific nuances of these terms will help you get the most out of OpenGL on the Macintosh platform.

Renderer

A renderer is the combination of the hardware and software that OpenGL uses to execute OpenGL commands. The characteristics of the final image depend on the capabilities of the graphics hardware associated with the renderer and the device used to display the image. OS X supports graphics accelerator cards with varying capabilities, as well as a software renderer. It is possible for multiple renderers, each with different capabilities or features, to drive a single set of graphics hardware. To learn how to determine the exact features of a renderer, see Determining the OpenGL Capabilities Supported by the Renderer.

Renderer and Buffer Attributes

Your application uses renderer and buffer attributes to communicate renderer and buffer requirements to OpenGL. The Apple implementation of OpenGL dynamically selects the best renderer for the current rendering task and does so transparently to your application. If your application has very specific rendering requirements and wants to control renderer selection, it can do so by supplying the appropriate renderer attributes. Buffer attributes describe such things as color and depth buffer sizes, and whether the data is stereoscopic or monoscopic.

Renderer and buffer attributes are represented by constants defined in the Apple-specific OpenGL APIs. OpenGL uses the attributes you supply to perform the setup work needed prior to drawing content. Drawing to a Window or View provides a simple example that shows how to use renderer and buffer attributes. Choosing Renderer and Buffer Attributes explains how to choose renderer and buffer attributes to achieve specific rendering goals.

Pixel Format Objects

A pixel format describes the format for pixel data storage in memory. The description includes the number and order of components as well as their names (typically red, blue, green and alpha). It also includes other information, such as whether a pixel contains stencil and depth values. A pixel format object is an opaque data structure that holds a pixel format along with a list of renderers and display devices that satisfy the requirements specified by an application.

Each of the Apple-specific OpenGL APIs defines a pixel format data type and accessor routines that you can use to obtain the information referenced by this object. See Virtual Screens for more information on renderer and display devices.

OpenGL Profiles

OpenGL profiles are new in OS X 10.7. An OpenGL profile is a renderer attribute used to request a specific version of the OpenGL specification. When your application provides an OpenGL profile as part of its renderer attributes, it only receives renderers that provide the complete feature set promised by that profile. The renderer can implement a different version of OpenGL so long as the version it supplies to your application provides the same functionality that your application requested.

Rendering Contexts

A rendering context, or simply context, contains OpenGL state information and objects for your application. State variables include such things as drawing color, the viewing and projection transformations, lighting characteristics, and material properties. State variables are set per context. When your application creates OpenGL objects (for example, textures), these are also associated with the rendering context.

Although your application can maintain more than one context, only one context can be the current context in a thread. The current context is the rendering context that receives OpenGL commands issued by your application.
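The relationship between contexts, per-context state, and the current context can be sketched with a small model. This is not Apple's API: `Context`, `makeCurrent`, and `setDrawColor` are hypothetical names chosen for illustration, and the only state tracked is a drawing color.

```cpp
#include <cassert>

// Hypothetical stand-in for a rendering context: it holds state only.
struct Context {
    float drawColor[4] = {0.0f, 0.0f, 0.0f, 1.0f};
};

// thread_local gives every thread its own "current context" slot,
// mirroring the rule that only one context is current per thread.
thread_local Context* currentContext = nullptr;

// Make ctx the target of later commands issued on the calling thread.
void makeCurrent(Context* ctx) { currentContext = ctx; }

// A state-setting command. It changes only the current context;
// every other context keeps its own state.
void setDrawColor(float r, float g, float b, float a) {
    assert(currentContext != nullptr && "no current context on this thread");
    currentContext->drawColor[0] = r;
    currentContext->drawColor[1] = g;
    currentContext->drawColor[2] = b;
    currentContext->drawColor[3] = a;
}
```

In this model, calling `setDrawColor` after `makeCurrent(&a)` leaves a second context `b` untouched until `b` is made current.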

Drawable Objects

A drawable object refers to an object allocated by the windowing system that can serve as an OpenGL framebuffer. A drawable object is the destination for OpenGL drawing operations. The behavior of drawable objects is not part of the OpenGL specification, but is defined by the OS X windowing system.

A drawable object can be any of the following: a Cocoa view, offscreen memory, a full-screen graphics device, or a pixel buffer.

Note: A pixel buffer (pbuffer) is an OpenGL buffer designed for hardware-accelerated offscreen drawing and as a source for texturing. An application can render an image into a pixel buffer and then use the pixel buffer as a texture for other OpenGL commands. Although pixel buffers are supported on Apple's implementation of OpenGL, Apple recommends you use framebuffer objects instead. See Drawing Offscreen for more information on offscreen rendering.

Before OpenGL can draw to a drawable object, the object must be attached to a rendering context. The characteristics of the drawable object narrow the selection of hardware and software specified by the rendering context. Apple's OpenGL automatically allocates buffers, creates surfaces, and specifies which renderer is the current renderer.

The logical flow of data from an application through OpenGL to a drawable object is shown in Figure 1-7. The application issues OpenGL commands that are sent to the current rendering context. The current context, which contains state information, constrains how the commands are interpreted by the appropriate renderer. The renderer converts the OpenGL primitives to an image in the framebuffer. (See also Running an OpenGL Program in OS X.)

Virtual Screens

The characteristics and quality of the OpenGL content that the user sees depend on both the renderer and the physical display used to view the content. The combination of renderer and physical display is called a virtual screen. This important concept has implications for any OpenGL application running on OS X.

A simple system, with one graphics card and one physical display, typically has two virtual screens. One virtual screen consists of a hardware-based renderer and the physical display and the other virtual screen consists of a software-based renderer and the physical display. OS X provides a software-based renderer as a fallback. It's possible for your application to decline the use of this fallback. You'll see how in Choosing Renderer and Buffer Attributes.

The green rectangle around the OpenGL image in Figure 1-8 surrounds a virtual screen for a system with one graphics card and one display. Note that a virtual screen is not the physical display, which is why the green rectangle is drawn around the application window that shows the OpenGL content. In this case, it is the renderer provided by the graphics card combined with the characteristics of the display.

Because a virtual screen is not simply the physical display, a system with one display can use more than one virtual screen at a time, as shown in Figure 1-9. The green rectangles are drawn to point out each virtual screen. Imagine that the virtual screen on the right side uses a software-only renderer and that the one on the left uses a hardware-dependent renderer. Although this is a contrived example, it illustrates the point.

It's also possible to have a virtual screen that can represent more than one physical display. The green rectangle in Figure 1-10 is drawn around a virtual screen that spans two physical displays. In this case, the same graphics hardware drives a pair of identical displays. A mirrored display also has a single virtual screen associated with multiple physical displays.

The concept of a virtual screen is particularly important when the user drags an image from one physical screen to another. When this happens, the virtual screen may change, and with it, a number of attributes of the imaging process, such as the current renderer, may change. With the dual-headed graphics card shown in Figure 1-10, dragging between displays preserves the same virtual screen. However, Figure 1-11 shows the case for which two displays represent two unique virtual screens. Not only are the two graphics cards different, but it's possible that the renderer, buffer attributes, and pixel characteristics are different. A change in any of these three items can result in a change in the virtual screen.

When the user drags an image from one display to another, and the virtual screen is the same for both displays, the image quality should appear similar. However, for the case shown in Figure 1-11, the image quality can be quite different.

OpenGL for OS X transparently manages rendering across multiple monitors. A user can drag a window from one monitor to another, even though their display capabilities may be different or they may be driven by dissimilar graphics cards with dissimilar resolutions and color depths.

OpenGL dynamically switches renderers when the virtual screen that contains the majority of the pixels in an OpenGL window changes. When a window is split between multiple virtual screens, the framebuffer is rasterized entirely by the renderer driving the screen that contains the largest segment of the window. The regions of the window on the other virtual screens are drawn by copying the rasterized image. When the entire OpenGL drawable object is displayed on one virtual screen, there is no performance impact from multiple monitor support.
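The "largest segment of the window" rule can be illustrated with a short geometric sketch. It is only a model of the selection described above, not how OS X implements it; `Rect`, `overlapArea`, and `dominantScreen` are hypothetical names, and each virtual screen is assumed to be an axis-aligned rectangle in a shared coordinate space.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

struct Rect { int x, y, width, height; };

// Area shared by two rectangles, or 0 if they do not overlap.
long overlapArea(const Rect& a, const Rect& b) {
    assert(a.width >= 0 && a.height >= 0 && b.width >= 0 && b.height >= 0);
    int left   = std::max(a.x, b.x);
    int right  = std::min(a.x + a.width,  b.x + b.width);
    int top    = std::max(a.y, b.y);
    int bottom = std::min(a.y + a.height, b.y + b.height);
    if (right <= left || bottom <= top) return 0;
    return static_cast<long>(right - left) * (bottom - top);
}

// Index of the virtual screen that contains the largest part of the
// window, or -1 if the window overlaps none of them.
int dominantScreen(const Rect& window, const std::vector<Rect>& screens) {
    int best = -1;
    long bestArea = 0;
    for (std::size_t i = 0; i < screens.size(); ++i) {
        long area = overlapArea(window, screens[i]);
        if (area > bestArea) {
            bestArea = area;
            best = static_cast<int>(i);
        }
    }
    return best;
}
```

An application that tracks virtual screen changes could compare the result before and after a window move and refresh its cached renderer capabilities only when the index changes.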

Applications need to track virtual screen changes and, if appropriate, update the current application state to reflect changes in renderer capabilities. See Working with Rendering Contexts.

Offline Renderer

An offline renderer is one that is not currently associated with a display. For example, a graphics processor might be powered down to conserve power, or there might not be a display hooked up to the graphics card. Offline renderers are not normally visible to your application, but your application can enable them by adding the appropriate renderer attribute. Taking advantage of offline renderers is useful because it gives the user a seamless experience when they plug in or remove displays.

For more information about configuring a context to see offline renderers, see Choosing Renderer and Buffer Attributes. To enable your application to switch to a renderer when a display is attached, see Update the Rendering Context When the Renderer or Geometry Changes.

Running an OpenGL Program in OS X

Figure 1-12 shows the flow of data in an OpenGL program, regardless of the platform that the program runs on.

Per-vertex operations include such things as applying transformation matrices to add perspective or to clip, and applying lighting effects. Per-pixel operations include such things as color conversion and applying blur and distortion effects. Pixels destined for textures are sent to texture assembly, where OpenGL stores textures until it needs to apply them onto an object.

OpenGL rasterizes the processed vertex and pixel data, meaning that the data are converged to create fragments. A fragment encapsulates all the values for a pixel, including color, depth, and sometimes texture values. These values are used during antialiasing and any other calculations needed to fill shapes and to connect vertices.

Per-fragment operations include applying environment effects, depth and stencil testing, and performing other operations such as blending and dithering. Some operations—such as hidden-surface removal—end the processing of a fragment. OpenGL draws fully processed fragments into the appropriate location in the framebuffer.
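As a rough illustration of how a per-fragment test can end processing, the sketch below models a framebuffer with a depth buffer. It assumes a "nearer fragment wins" comparison and ignores blending, stencil testing, and dithering; `Fragment`, `Framebuffer`, and `submit` are hypothetical names, not OpenGL calls.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

struct Fragment {
    std::size_t x, y;   // target pixel
    float depth;        // smaller means nearer to the viewer
    unsigned color;     // packed color value
};

class Framebuffer {
public:
    Framebuffer(std::size_t width, std::size_t height)
        : width_(width),
          color_(width * height, 0u),
          depth_(width * height, std::numeric_limits<float>::infinity()) {}

    // Returns true if the fragment was written, false if the depth
    // test discarded it (hidden-surface removal).
    bool submit(const Fragment& f) {
        assert(f.x < width_ && f.y * width_ + f.x < color_.size());
        std::size_t i = f.y * width_ + f.x;
        if (f.depth >= depth_[i]) return false;  // hidden: processing ends
        depth_[i] = f.depth;
        color_[i] = f.color;
        return true;
    }

    unsigned colorAt(std::size_t x, std::size_t y) const {
        return color_[y * width_ + x];
    }

private:
    std::size_t width_;
    std::vector<unsigned> color_;
    std::vector<float> depth_;
};
```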

The dashed arrows in Figure 1-12 indicate reading pixel data back from the framebuffer. They represent operations performed by OpenGL functions such as glReadPixels, glCopyPixels, and glCopyTexImage2D.

So far you've seen how OpenGL operates on any platform. But how do Cocoa applications provide data to OpenGL for processing? A Mac application must perform these tasks:

- Set up a list of buffer and renderer attributes that define the sort of drawing you want to perform. (See Renderer and Buffer Attributes.)
- Request the system to create a pixel format object that contains a pixel format that meets the constraints of the buffer and renderer attributes and a list of all suitable combinations of displays and renderers. (See Pixel Format Objects and Virtual Screens.)
- Create a rendering context to hold state information that controls such things as drawing color, view and projection matrices, characteristics of light, and conventions used to pack pixels. When you set up this context, you must provide a pixel format object because the rendering context needs to know the set of virtual screens that can be used for drawing. (See Rendering Contexts.)
- Bind a drawable object to the rendering context. The drawable object is what captures the OpenGL drawing sent to that rendering context. (See Drawable Objects.)
- Make the rendering context the current context. OpenGL automatically targets the current context. Although your application might have several rendering contexts set up, only the current one is the active one for drawing purposes.
- Issue OpenGL drawing commands.
- Flush the contents of the rendering context. This causes previously submitted commands to be rendered to the drawable object and displays them to the user.

The tasks described in the first five bullet items are platform-specific. Drawing to a Window or View provides simple examples of how to perform them. As you read other parts of this document, you'll see there are a number of other tasks that, although not mandatory for drawing, are really quite necessary for any application that wants to use OpenGL to perform complex 3D drawing efficiently on a wide variety of Macintosh systems.

The flag -lglfw is for GLFW, and -lvulkan links with the Vulkan function loader. The remaining flags are low-level system libraries that GLFW itself depends on for threading and window management.

Specifying the rule to compile VulkanTest is straightforward now. Make sure to use tabs for indentation instead of spaces.

Verify that this rule works by saving the makefile and running make in the directory with main.cpp and Makefile. This should result in a VulkanTest executable.

We'll now define two more rules, test and clean, where the former will run the executable and the latter will remove a built executable:

Running make test should show the program running successfully, and displaying the number of Vulkan extensions. The application should exit with the success return code (0) when you close the empty window. You should now have a complete makefile that resembles the following:

You can now use this directory as a template for your Vulkan projects. Make a copy, rename it to something like HelloTriangle and remove all of the code in main.cpp.

You are now all set for the real adventure.

MacOS

These instructions will assume you are using Xcode and the Homebrew package manager. Also, keep in mind that you will need at least MacOS version 10.11, and your device needs to support the Metal API.

Vulkan SDK

The most important component you'll need for developing Vulkan applications is the SDK. It includes the headers, standard validation layers, debugging tools and a loader for the Vulkan functions. The loader looks up the functions in the driver at runtime, similarly to GLEW for OpenGL - if you're familiar with that.

The SDK can be downloaded from the LunarG website using the buttons at the bottom of the page. You don't have to create an account, but it will give you access to some additional documentation that may be useful to you.

The SDK version for MacOS internally uses MoltenVK. There is no native support for Vulkan on MacOS, so what MoltenVK does is actually act as a layer that translates Vulkan API calls to Apple's Metal graphics framework. With this you can take advantage of debugging and performance benefits of Apple's Metal framework.

After downloading it, simply extract the contents to a folder of your choice (keep in mind you will need to reference it when creating your projects on Xcode). Inside the extracted folder, in the Applications folder you should have some executable files that will run a few demos using the SDK. Run the vkcube executable and you will see the following:

GLFW

As mentioned before, Vulkan by itself is a platform-agnostic API and does not include tools for creating a window to display the rendered results. We'll use the GLFW library to create a window, which supports Windows, Linux and MacOS. There are other libraries available for this purpose, like SDL, but the advantage of GLFW is that it also abstracts away some of the other platform-specific things in Vulkan besides just window creation.

To install GLFW on MacOS we will use the Homebrew package manager to get the glfw package:

GLM

Vulkan does not include a library for linear algebra operations, so we'll have to download one. GLM is a nice library that is designed for use with graphics APIs and is also commonly used with OpenGL.

It is a header-only library that can be installed from the glm package:

Setting up Xcode

Now that all the dependencies are installed we can set up a basic Xcode project for Vulkan. Most of the instructions here are essentially a lot of 'plumbing' so we can get all the dependencies linked to the project. Also, keep in mind that whenever the following instructions mention the folder vulkansdk, we are referring to the folder where you extracted the Vulkan SDK.

Start Xcode and create a new Xcode project. On the window that will open select Application > Command Line Tool.

Select Next, write a name for the project and for Language select C++.

Press Next and the project should have been created. Now, let's change the code in the generated main.cpp file to the following code:

Keep in mind you are not required to understand everything this code is doing yet; we are just setting up some API calls to make sure everything is working.

Xcode should already be showing some errors such as libraries it cannot find. We will now start configuring the project to get rid of those errors. On the Project Navigator panel select your project. Open the Build Settings tab and then:

- Find the Header Search Paths field and add a link to /usr/local/include (this is where Homebrew installs headers, so the glm and glfw3 header files should be there) and a link to vulkansdk/macOS/include for the Vulkan headers.
- Find the Library Search Paths field and add a link to /usr/local/lib (again, this is where Homebrew installs libraries, so the glfw3 library files should be there) and a link to vulkansdk/macOS/lib.

It should look like so (obviously, paths will be different depending on where you placed your files):

Now, in the Build Phases tab, on Link Binary With Libraries we will add both the glfw3 and the vulkan frameworks. To make things easier we will be adding the dynamic libraries in the project (you can check the documentation of these libraries if you want to use the static frameworks).

- For glfw, open the folder /usr/local/lib and there you will find a file with a name like libglfw.3.x.dylib ('x' is the library's version number; it might be different depending on when you downloaded the package from Homebrew). Simply drag that file to the Linked Frameworks and Libraries tab on Xcode.
- For vulkan, go to vulkansdk/macOS/lib. Do the same for both files libvulkan.1.dylib and libvulkan.1.x.xx.dylib (where 'x' will be the version number of the SDK you downloaded).

After adding those libraries, in the same tab on Copy Files change Destination to 'Frameworks', clear the subpath and deselect 'Copy only when installing'. Click on the '+' sign and add all three of those frameworks here as well.

Your Xcode configuration should look like:

The last thing you need to set up is a couple of environment variables. On the Xcode toolbar go to Product > Scheme > Edit Scheme..., and in the Arguments tab add the following two environment variables:

- VK_ICD_FILENAMES = vulkansdk/macOS/share/vulkan/icd.d/MoltenVK_icd.json
- VK_LAYER_PATH = vulkansdk/macOS/share/vulkan/explicit_layer.d

It should look like so:

Finally, you should be all set! Now if you run the project (remembering to set the build configuration to Debug or Release depending on the configuration you chose) you should see the following:

The number of extensions should be non-zero. The other logs are from the libraries; you might get different messages from those depending on your configuration.

You are now all set for the real thing.

Important: OpenGL was deprecated in macOS 10.14. To create high-performance code on GPUs, use the Metal framework instead. See Metal.

You can tell that Apple has an implementation of OpenGL on its platform by looking at the user interface for many of the applications that are installed with OS X. The reflections built into iChat (Figure 1-1) provide one of the more notable examples. The responsiveness of the windows, the instant results of applying an effect in iPhoto, and many other operations in OS X are due to the use of OpenGL. OpenGL is available to all Macintosh applications.

OpenGL for OS X is implemented as a set of frameworks that contain the OpenGL runtime engine and its drawing software. These frameworks use platform-neutral virtual resources to free your programming as much as possible from the underlying graphics hardware. OS X provides a set of application programming interfaces (APIs) that Cocoa applications can use to support OpenGL drawing.

This chapter provides an overview of OpenGL and the interfaces your application uses on the Mac platform to tap into it.

OpenGL Concepts

To understand how OpenGL fits into OS X and your application, you should first understand how OpenGL is designed.

OpenGL Implements a Client-Server Model

OpenGL uses a client-server model, as shown in Figure 1-2. When your application calls an OpenGL function, it talks to an OpenGL client. The client delivers drawing commands to an OpenGL server. The nature of the client, the server, and the communication path between them is specific to each implementation of OpenGL. For example, the server and clients could be on different computers, or they could be different processes on the same computer.

A client-server model allows the graphics workload to be divided between the client and the server. For example, all Macintosh computers ship with dedicated graphics hardware that is optimized to perform graphics calculations in parallel. Figure 1-3 shows a common arrangement of CPUs and GPUs. With this hardware configuration, the OpenGL client executes on the CPU and the server executes on the GPU.

OpenGL Commands Can Be Executed Asynchronously

A benefit of the OpenGL client-server model is that the client can return control to the application before the command has finished executing. An OpenGL client may also buffer or delay execution of OpenGL commands. If OpenGL required all commands to complete before returning control to the application, then either the CPU or the GPU would be idle waiting for the other to provide it data, resulting in reduced performance.

Some OpenGL commands implicitly or explicitly require the client to wait until some or all previously submitted commands have completed. OpenGL applications should be designed to reduce the frequency of client-server synchronizations. See OpenGL Application Design Strategies for more information on how to design your OpenGL application.

OpenGL Commands Are Executed In Order

OpenGL guarantees that commands are executed in the order they are received by OpenGL.
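The two properties just described, deferred execution and in-order execution, can be modeled with a simple queue. This is a conceptual sketch only: `CommandQueue`, `submit`, and `finish` are hypothetical names, and commands are represented as strings rather than real drawing operations.

```cpp
#include <cassert>
#include <cstddef>
#include <queue>
#include <string>
#include <utility>
#include <vector>

class CommandQueue {
public:
    // Client side: record the command and return to the caller
    // immediately, without executing it.
    void submit(std::string name) {
        assert(!name.empty() && "commands need a name in this model");
        pending_.push(std::move(name));
    }

    // Synchronization point: run every pending command in the order
    // it was submitted, then return.
    void finish() {
        while (!pending_.empty()) {
            executed_.push_back(std::move(pending_.front()));
            pending_.pop();
        }
    }

    std::size_t pendingCount() const { return pending_.size(); }
    const std::vector<std::string>& executed() const { return executed_; }

private:
    std::queue<std::string> pending_;      // buffered by the client
    std::vector<std::string> executed_;    // completed by the server
};
```

Each call to `finish` stands in for a client-server synchronization, which is why the text advises keeping such calls infrequent.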

OpenGL Copies Client Data at Call-Time

When an application calls an OpenGL function, the OpenGL client copies any data provided in the parameters before returning control to the application. For example, if a parameter points at an array of vertex data stored in application memory, OpenGL must copy that data before returning. Therefore, an application is free to change memory it owns regardless of calls it makes to OpenGL.

The data that the client copies is often reformatted before it is transmitted to the server. Copying, modifying, and transmitting parameters to the server adds overhead to calling OpenGL. Applications should be designed to minimize copy overhead.
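A minimal sketch of copy-at-call-time semantics follows. `Client` and `submitVertices` are hypothetical names used for illustration; the point is only that the callee takes its own copy before returning, so later changes to the caller's array do not affect what was submitted.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

class Client {
public:
    // Copies the caller's vertex data before returning, so the caller
    // may modify or free its own memory afterwards.
    void submitVertices(const float* data, std::size_t count) {
        assert(data != nullptr || count == 0);
        staged_.assign(data, data + count);
    }

    // The copy that would later be reformatted and sent to the server.
    const std::vector<float>& staged() const { return staged_; }

private:
    std::vector<float> staged_;
};
```

The copy is what makes the call safe, and it is also the overhead the text recommends minimizing.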

OpenGL Relies on Platform-Specific Libraries For Critical Functionality

OpenGL provides a rich set of cross-platform drawing commands, but does not define functions to interact with an operating system's graphics subsystem. Instead, OpenGL expects each implementation to define an interface to create rendering contexts and associate them with the graphics subsystem. A rendering context holds all of the data stored in the OpenGL state machine. Allowing multiple contexts allows the state in one machine to be changed by an application without affecting other contexts.

Associating OpenGL with the graphics subsystem usually means allowing OpenGL content to be rendered to a specific window. When content is associated with a window, the implementation creates whatever resources are required to allow OpenGL to render and display images.

OpenGL in OS X

OpenGL in OS X implements the OpenGL client-server model using a common OpenGL framework and plug-in drivers. The framework and driver combine to implement the client portion of OpenGL, as shown in Figure 1-4. Dedicated graphics hardware provides the server. Although this is the common scenario, Apple also provides a software renderer implemented entirely on the CPU.

OS X supports a display space that can include multiple dissimilar displays, each driven by different graphics cards with different capabilities. In addition, multiple OpenGL renderers can drive each graphics card. To accommodate this versatility, OpenGL for OS X is segmented into well-defined layers: a window system layer, a framework layer, and a driver layer, as shown in Figure 1-5. This segmentation allows for plug-in interfaces to both the window system layer and the framework layer. Plug-in interfaces offer flexibility in software and hardware configuration without violating the OpenGL standard.

The window system layer is an OS X–specific layer that your application uses to create OpenGL rendering contexts and associate them with the OS X windowing system. The NSOpenGL classes and Core OpenGL (CGL) API also provide some additional controls for how OpenGL operates on that context. See OpenGL APIs Specific to OS X for more information. Finally, this layer also includes the OpenGL libraries—GL, GLU, and GLUT. (See Apple-Implemented OpenGL Libraries for details.)

The common OpenGL framework layer is the software interface to the graphics hardware. This layer contains Apple's implementation of the OpenGL specification.

The driver layer contains the optional GLD plug-in interface and one or more GLD plug-in drivers, which may have different software and hardware support capabilities. The GLD plug-in interface supports third-party plug-in drivers, allowing third-party hardware vendors to provide drivers optimized to take best advantage of their graphics hardware.

Accessing OpenGL Within Your Application

The programming interfaces that your application calls fall into two categories—those specific to the Macintosh platform and those defined by the OpenGL Working Group. The Apple-specific programming interfaces are what Cocoa applications use to communicate with the OS X windowing system. These APIs don't create OpenGL content; they manage content, direct it to a drawing destination, and control various aspects of the rendering operation. Your application calls the OpenGL APIs to create content. OpenGL routines accept vertex, pixel, and texture data and assemble the data to create an image. The final image resides in a framebuffer, which is presented to the user through the windowing-system-specific API.

OpenGL APIs Specific to OS X

OS X offers two easy-to-use APIs that are specific to the Macintosh platform: the NSOpenGL classes and the CGL API. Throughout this document, these APIs are referred to as the Apple-specific OpenGL APIs.

Cocoa provides many classes specifically for OpenGL:

- The NSOpenGLContext class implements a standard OpenGL rendering context.
- The NSOpenGLPixelFormat class is used by an application to specify the parameters used to create the OpenGL context.
- The NSOpenGLView class is a subclass of NSView that uses NSOpenGLContext and NSOpenGLPixelFormat to display OpenGL content in a view. Applications that subclass NSOpenGLView do not need to directly subclass NSOpenGLPixelFormat or NSOpenGLContext. Applications that need customization or flexibility can subclass NSView and create NSOpenGLPixelFormat and NSOpenGLContext objects manually.
- The NSOpenGLLayer class allows your application to integrate OpenGL drawing with Core Animation.
- The NSOpenGLPixelBuffer class provides hardware-accelerated offscreen drawing.

The Core OpenGL API (CGL) resides in the OpenGL framework and is used to implement the NSOpenGL classes. CGL offers the most direct access to system functionality and provides the highest level of graphics performance and control for drawing to the full screen. CGL Reference provides a complete description of this API.

Apple-Implemented OpenGL Libraries

OS X also provides the full suite of graphics libraries that are part of every implementation of OpenGL: GL, GLU, GLUT, and GLX. Two of these—GL and GLU—provide low-level drawing support. The other two—GLUT and GLX—support drawing to the screen.

Your application typically interfaces directly with the core OpenGL library (GL), the OpenGL Utility library (GLU), and the OpenGL Utility Toolkit (GLUT). The GL library provides a low-level modular API that allows you to define graphical objects. It supports the core functions defined by the OpenGL specification. It provides support for two fundamental types of graphics primitives: objects defined by sets of vertices, such as line segments and simple polygons, and objects that are pixel-based images, such as filled rectangles and bitmaps. The GL API does not handle complex custom graphical objects; your application must decompose them into simpler geometries.

The GLU library combines functions from the GL library to support more advanced graphics features. It runs on all conforming implementations of OpenGL. GLU is capable of creating and handling complex polygons (including quartic equations), processing nonuniform rational b-spline curves (NURBs), scaling images, and decomposing a surface to a series of polygons (tessellation).

The GLUT library provides a cross-platform API for performing operations associated with the user windowing environment—displaying and redrawing content, handling events, and so on. It is implemented on most UNIX, Linux, and Windows platforms. Code that you write with GLUT can be reused across multiple platforms. However, such code is constrained by a generic set of user interface elements and event-handling options. This document does not show how to use GLUT. The GLUTBasics sample project shows you how to get started with GLUT.

GLX is an OpenGL extension that supports using OpenGL within a window provided by the X Window system. X11 for OS X is available as an optional installation. (It's not shown in Figure 1-6.) See OpenGL Programming for the X Window System, published by Addison Wesley for more information.

This document does not show how to use these libraries. For detailed information, either go to the OpenGL Foundation website http://www.opengl.org or see the most recent version of 'The Red book'—OpenGL Programming Guide, published by Addison Wesley.

Terminology

There are a number of terms that you'll want to understand so that you can write code effectively using OpenGL: renderer, renderer attributes, buffer attributes, pixel format objects, rendering contexts, drawable objects, and virtual screens. As an OpenGL programmer, some of these may seem familiar to you. However, understanding the Apple-specific nuances of these terms will help you get the most out of OpenGL on the Macintosh platform.

Renderer

A renderer is the combination of the hardware and software that OpenGL uses to execute OpenGL commands. The characteristics of the final image depend on the capabilities of the graphics hardware associated with the renderer and the device used to display the image. OS X supports graphics accelerator cards with varying capabilities, as well as a software renderer. It is possible for multiple renderers, each with different capabilities or features, to drive a single set of graphics hardware. To learn how to determine the exact features of a renderer, see Determining the OpenGL Capabilities Supported by the Renderer.

Renderer and Buffer Attributes

Your application uses renderer and buffer attributes to communicate renderer and buffer requirements to OpenGL. The Apple implementation of OpenGL dynamically selects the best renderer for the current rendering task and does so transparently to your application. If your application has very specific rendering requirements and wants to control renderer selection, it can do so by supplying the appropriate renderer attributes. Buffer attributes describe such things as color and depth buffer sizes, and whether the data is stereoscopic or monoscopic.

Renderer and buffer attributes are represented by constants defined in the Apple-specific OpenGL APIs. OpenGL uses the attributes you supply to perform the setup work needed prior to drawing content. Drawing to a Window or View provides a simple example that shows how to use renderer and buffer attributes. Choosing Renderer and Buffer Attributes explains how to choose renderer and buffer attributes to achieve specific rendering goals.

Pixel Format Objects

A pixel format describes the format for pixel data storage in memory. The description includes the number and order of components as well as their names (typically red, blue, green and alpha). It also includes other information, such as whether a pixel contains stencil and depth values. A pixel format object is an opaque data structure that holds a pixel format along with a list of renderers and display devices that satisfy the requirements specified by an application.

Each of the Apple-specific OpenGL APIs defines a pixel format data type and accessor routines that you can use to obtain the information referenced by this object. See Virtual Screens for more information on renderer and display devices.

OpenGL Profiles

OpenGL profiles are new in OS X 10.7. An OpenGL profile is a renderer attribute used to request a specific version of the OpenGL specification. When your application provides an OpenGL profile as part of its renderer attributes, it only receives renderers that provide the complete feature set promised by that profile. The renderer can implement a different version of the OpenGL specification as long as the version it supplies to your application provides the same functionality that your application requested.

Rendering Contexts

A rendering context, or simply context, contains OpenGL state information and objects for your application. State variables include such things as drawing color, the viewing and projection transformations, lighting characteristics, and material properties. State variables are set per context. When your application creates OpenGL objects (for example, textures), these are also associated with the rendering context.

Although your application can maintain more than one context, only one context can be the current context in a thread. The current context is the rendering context that receives OpenGL commands issued by your application.
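The idea of one current context per thread can be sketched in plain C with a thread-local pointer. This is a conceptual model only: Context, make_current, get_current, and set_draw_color are hypothetical names, and the real context type is opaque and managed by the platform API.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for a rendering context: a bag of state. */
typedef struct {
    float draw_color[4];   /* per-context state variable */
    int texture_count;     /* objects created in this context */
} Context;

/* Each thread has its own notion of "the current context". */
static _Thread_local Context *current_context = NULL;

void make_current(Context *ctx) { current_context = ctx; }

Context *get_current(void) { return current_context; }

/* A "command" carries no context argument; it implicitly targets
   whichever context is current on the calling thread.
   Returns 0 on success, -1 if no context is current. */
int set_draw_color(float r, float g, float b, float a)
{
    if (current_context == NULL)
        return -1;
    current_context->draw_color[0] = r;
    current_context->draw_color[1] = g;
    current_context->draw_color[2] = b;
    current_context->draw_color[3] = a;
    return 0;
}
```

With two contexts a and b, calling make_current(&a) followed by set_draw_color changes only a. After make_current(&b), the same call changes only b, which is why state set in one context never leaks into another.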

Drawable Objects

A drawable object refers to an object allocated by the windowing system that can serve as an OpenGL framebuffer. A drawable object is the destination for OpenGL drawing operations. The behavior of drawable objects is not part of the OpenGL specification, but is defined by the OS X windowing system.

A drawable object can be any of the following: a Cocoa view, offscreen memory, a full-screen graphics device, or a pixel buffer.

Note: A pixel buffer (pbuffer) is an OpenGL buffer designed for hardware-accelerated offscreen drawing and as a source for texturing. An application can render an image into a pixel buffer and then use the pixel buffer as a texture for other OpenGL commands. Although pixel buffers are supported on Apple's implementation of OpenGL, Apple recommends you use framebuffer objects instead. See Drawing Offscreen for more information on offscreen rendering.

Before OpenGL can draw to a drawable object, the object must be attached to a rendering context. The characteristics of the drawable object narrow the selection of hardware and software specified by the rendering context. Apple's OpenGL automatically allocates buffers, creates surfaces, and specifies which renderer is the current renderer.

The logical flow of data from an application through OpenGL to a drawable object is shown in Figure 1-7. The application issues OpenGL commands that are sent to the current rendering context. The current context, which contains state information, constrains how the commands are interpreted by the appropriate renderer. The renderer converts the OpenGL primitives to an image in the framebuffer. (See also Running an OpenGL Program in OS X.)

Virtual Screens

The characteristics and quality of the OpenGL content that the user sees depend on both the renderer and the physical display used to view the content. The combination of renderer and physical display is called a virtual screen. This important concept has implications for any OpenGL application running on OS X.

A simple system, with one graphics card and one physical display, typically has two virtual screens. One virtual screen consists of a hardware-based renderer and the physical display and the other virtual screen consists of a software-based renderer and the physical display. OS X provides a software-based renderer as a fallback. It's possible for your application to decline the use of this fallback. You'll see how in Choosing Renderer and Buffer Attributes.

The green rectangle around the OpenGL image in Figure 1-8 surrounds a virtual screen for a system with one graphics card and one display. Note that a virtual screen is not the physical display, which is why the green rectangle is drawn around the application window that shows the OpenGL content. In this case, it is the renderer provided by the graphics card combined with the characteristics of the display.

Because a virtual screen is not simply the physical display, a system with one display can use more than one virtual screen at a time, as shown in Figure 1-9. The green rectangles are drawn to point out each virtual screen. Imagine that the virtual screen on the right side uses a software-only renderer and that the one on the left uses a hardware-dependent renderer. Although this is a contrived example, it illustrates the point.

It's also possible to have a virtual screen that can represent more than one physical display. The green rectangle in Figure 1-10 is drawn around a virtual screen that spans two physical displays. In this case, the same graphics hardware drives a pair of identical displays. A mirrored display also has a single virtual screen associated with multiple physical displays.

The concept of a virtual screen is particularly important when the user drags an image from one physical screen to another. When this happens, the virtual screen may change, and with it, a number of attributes of the imaging process, such as the current renderer, may change. With the dual-headed graphics card shown in Figure 1-10, dragging between displays preserves the same virtual screen. However, Figure 1-11 shows the case for which two displays represent two unique virtual screens. Not only are the two graphics cards different, but it's possible that the renderer, buffer attributes, and pixel characteristics are different. A change in any of these three items can result in a change in the virtual screen.

When the user drags an image from one display to another, and the virtual screen is the same for both displays, the image quality should appear similar. However, for the case shown in Figure 1-11, the image quality can be quite different.

OpenGL for OS X transparently manages rendering across multiple monitors. A user can drag a window from one monitor to another, even though their display capabilities may be different or they may be driven by dissimilar graphics cards with dissimilar resolutions and color depths.

OpenGL dynamically switches renderers when the virtual screen that contains the majority of the pixels in an OpenGL window changes. When a window is split between multiple virtual screens, the framebuffer is rasterized entirely by the renderer driving the screen that contains the largest segment of the window. The regions of the window on the other virtual screens are drawn by copying the rasterized image. When the entire OpenGL drawable object is displayed on one virtual screen, there is no performance impact from multiple monitor support.
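The selection rule described above (the renderer follows the virtual screen that holds the largest part of the window) can be approximated with a simple rectangle-overlap calculation. The sketch below is an assumption-laden model, not Apple's implementation: Rect, overlap_area, and pick_virtual_screen are hypothetical names, each virtual screen is reduced to one rectangle in a shared coordinate space, and ties go to the lower index.

```c
#include <assert.h>
#include <stddef.h>

typedef struct { int x, y, width, height; } Rect;

/* Area, in pixels, of the intersection of two rectangles (0 if disjoint). */
static long overlap_area(Rect a, Rect b)
{
    int left   = a.x > b.x ? a.x : b.x;
    int top    = a.y > b.y ? a.y : b.y;
    int right  = (a.x + a.width)  < (b.x + b.width)  ? (a.x + a.width)  : (b.x + b.width);
    int bottom = (a.y + a.height) < (b.y + b.height) ? (a.y + a.height) : (b.y + b.height);

    if (right <= left || bottom <= top)
        return 0;
    return (long)(right - left) * (long)(bottom - top);
}

/* Returns the index of the screen covering the most window pixels,
   or -1 if the window does not touch any screen. */
int pick_virtual_screen(Rect window, const Rect *screens, int count)
{
    assert(screens != NULL || count == 0);
    int best = -1;
    long best_area = 0;

    for (int i = 0; i < count; ++i) {
        long area = overlap_area(window, screens[i]);
        if (area > best_area) {   /* strict: the first screen wins a tie */
            best_area = area;
            best = i;
        }
    }
    return best;
}
```

For two side-by-side screens, a window that straddles the boundary maps to whichever side holds more of it. An application would compare the result with the previous one to notice that the renderer may have changed.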

Applications need to track virtual screen changes and, if appropriate, update the current application state to reflect changes in renderer capabilities. See Working with Rendering Contexts.

Offline Renderer

An offline renderer is one that is not currently associated with a display. For example, a graphics processor might be powered down to conserve power, or there might not be a display hooked up to the graphics card. Offline renderers are not normally visible to your application, but your application can enable them by adding the appropriate renderer attribute. Taking advantage of offline renderers is useful because it gives the user a seamless experience when they plug in or remove displays.

For more information about configuring a context to see offline renderers, see Choosing Renderer and Buffer Attributes. To enable your application to switch to a renderer when a display is attached, see Update the Rendering Context When the Renderer or Geometry Changes.
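To make the visibility rules concrete, the following sketch filters a list of renderers according to two switches: whether offline renderers are allowed and whether the software fallback is acceptable. Everything here is hypothetical (Renderer, Selection, visible_renderers); the real mechanism is a renderer attribute supplied when the pixel format is chosen.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical description of a renderer for illustration. */
typedef struct {
    const char *name;
    bool hardware;   /* false for the software fallback */
    bool online;     /* false if no display is currently attached */
} Renderer;

typedef struct {
    bool allow_offline;    /* opt in to renderers without a display */
    bool allow_software;   /* accept the software fallback */
} Selection;

/* Copies pointers to the renderers the application may see into out
   (which must have room for count entries) and returns how many. */
size_t visible_renderers(const Renderer *all, size_t count,
                         Selection sel, const Renderer **out)
{
    assert(all != NULL && out != NULL);
    size_t n = 0;

    for (size_t i = 0; i < count; ++i) {
        if (!all[i].online && !sel.allow_offline)
            continue;   /* offline renderers are hidden by default */
        if (!all[i].hardware && !sel.allow_software)
            continue;   /* application declined the fallback */
        out[n++] = &all[i];
    }
    return n;
}
```

With one online GPU, one offline GPU, and the software renderer, the default selection sees only the online GPU and the software renderer. Setting allow_offline adds the second GPU, so the application is prepared when a display is attached to it.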

Running an OpenGL Program in OS X

Figure 1-12 shows the flow of data in an OpenGL program, regardless of the platform that the program runs on.

Per-vertex operations include such things as applying transformation matrices to add perspective or to clip, and applying lighting effects. Per-pixel operations include such things as color conversion and applying blur and distortion effects. Pixels destined for textures are sent to texture assembly, where OpenGL stores textures until it needs to apply them onto an object.
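As a small worked example of a per-vertex operation, the sketch below applies a 4x4 transformation matrix to a single vertex on the CPU. It assumes the column-major layout conventionally used for OpenGL matrices; transform_vertex and make_translation are hypothetical helper names, and in a real program this work is performed by OpenGL, not by application code like this.

```c
#include <assert.h>

/* Multiplies a 4x4 matrix by a 4-component vertex.
   The matrix is column-major: element (row, col) is m[col * 4 + row]. */
void transform_vertex(const float m[16], const float in[4], float out[4])
{
    assert(in != out);   /* the result must not overwrite the input */
    for (int row = 0; row < 4; ++row) {
        out[row] = m[0 * 4 + row] * in[0]
                 + m[1 * 4 + row] * in[1]
                 + m[2 * 4 + row] * in[2]
                 + m[3 * 4 + row] * in[3];
    }
}

/* Fills m with a matrix that translates by (tx, ty, tz). */
void make_translation(float m[16], float tx, float ty, float tz)
{
    for (int i = 0; i < 16; ++i)
        m[i] = (i % 5 == 0) ? 1.0f : 0.0f;   /* identity: indices 0, 5, 10, 15 */
    m[12] = tx;   /* the fourth column holds the translation */
    m[13] = ty;
    m[14] = tz;
}
```

Translating the vertex (1, 1, 1, 1) by (2, 3, 4) yields (3, 4, 5, 1). The w component stays 1 because translation leaves the last row of the matrix as (0, 0, 0, 1).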

OpenGL rasterizes the processed vertex and pixel data, meaning that the data are converged to create fragments. A fragment encapsulates all the values for a pixel, including color, depth, and sometimes texture values. These values are used during antialiasing and any other calculations needed to fill shapes and to connect vertices.

Per-fragment operations include applying environment effects, depth and stencil testing, and performing other operations such as blending and dithering. Some operations—such as hidden-surface removal—end the processing of a fragment. OpenGL draws fully processed fragments into the appropriate location in the framebuffer.
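Two of the per-fragment operations named above, depth testing and blending, can be modeled on the CPU in a few lines. The sketch assumes a "less than" depth comparison and source-alpha blending, which are only one possible configuration; Fragment, Pixel, and process_fragment are hypothetical names and do not describe how any renderer is actually implemented.

```c
#include <assert.h>
#include <stddef.h>

typedef struct { float r, g, b, a; float depth; } Fragment;
typedef struct { float r, g, b; float depth; } Pixel;

/* Applies a depth test and alpha blending to one framebuffer pixel.
   Returns 1 if the fragment was written, 0 if it was discarded. */
int process_fragment(Pixel *dst, Fragment f)
{
    assert(dst != NULL);

    /* Depth test: a fragment that is not closer than what is already
       stored is hidden, so its processing ends here. */
    if (!(f.depth < dst->depth))
        return 0;

    /* Blend: weight the incoming color by its alpha and the stored
       color by the remainder. */
    dst->r = f.r * f.a + dst->r * (1.0f - f.a);
    dst->g = f.g * f.a + dst->g * (1.0f - f.a);
    dst->b = f.b * f.a + dst->b * (1.0f - f.a);
    dst->depth = f.depth;
    return 1;
}
```

Starting from a black pixel at depth 1.0, an opaque red fragment at depth 0.5 replaces the pixel, a later fragment at depth 0.8 is discarded, and a half-transparent blue fragment at depth 0.25 blends to an even mix of red and blue.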

The dashed arrows in Figure 1-12 indicate reading pixel data back from the framebuffer. They represent operations performed by OpenGL functions such as glReadPixels, glCopyPixels, and glCopyTexImage2D.

So far you've seen how OpenGL operates on any platform. But how do Cocoa applications provide data to OpenGL for processing? A Mac application must perform these tasks:

Set up a list of buffer and renderer attributes that define the sort of drawing you want to perform. (See Renderer and Buffer Attributes.)

Ask the system to create a pixel format object that contains a pixel format that meets the constraints of the buffer and renderer attributes and a list of all suitable combinations of displays and renderers. (See Pixel Format Objects and Virtual Screens.)

Create a rendering context to hold state information that controls such things as drawing color, view and projection matrices, characteristics of light, and conventions used to pack pixels. When you set up this context, you must provide a pixel format object because the rendering context needs to know the set of virtual screens that can be used for drawing. (See Rendering Contexts.)

Bind a drawable object to the rendering context. The drawable object is what captures the OpenGL drawing sent to that rendering context. (See Drawable Objects.)

Make the rendering context the current context. OpenGL automatically targets the current context. Although your application might have several rendering contexts set up, only the current one is the active one for drawing purposes.

Issue OpenGL drawing commands.

Flush the contents of the rendering context. This causes previously submitted commands to be rendered to the drawable object and displays them to the user.

The tasks described in the first five bullet items are platform-specific. Drawing to a Window or View provides simple examples of how to perform them. As you read other parts of this document, you'll see there are a number of other tasks that, although not mandatory for drawing, are really quite necessary for any application that wants to use OpenGL to perform complex 3D drawing efficiently on a wide variety of Macintosh systems.

Making Great OpenGL Applications on the Macintosh

OpenGL lets you create applications with outstanding graphics performance as well as a great user experience, but neither of these things comes for free. Your application performs best when it works with OpenGL rather than against it. With that in mind, here are guidelines you should follow to create high-performance, future-looking OpenGL applications:

Ensure your application runs successfully with offline renderers and multiple graphics cards.

Apple ships many sophisticated hardware configurations. Your application should handle renderer changes seamlessly. You should test your application on a Mac with multiple graphics processors and include tests for attaching and removing displays. For more information on how to implement hot plugging correctly, see Working with Rendering Contexts.

Avoid finishing and flushing operations.

Pay particular attention to OpenGL functions that force previously submitted commands to complete. Synchronizing the graphics hardware to the CPU may result in dramatically lower performance. Performance is covered in detail in OpenGL Application Design Strategies.

Use multithreading to improve the performance of your OpenGL application.

Many Macs support multiple simultaneous threads of execution. Your application should take advantage of concurrency. Well-behaved applications can take advantage of concurrency in just a few lines of code. See Concurrency and OpenGL.

Use buffer objects to manage your data.

Vertex buffer objects (VBOs) allow OpenGL to manage your application's vertex data. Using vertex buffer objects gives OpenGL more opportunities to cache vertex data in a format that is friendly to the graphics hardware, improving application performance. For more information see Best Practices for Working with Vertex Data.

Similarly, pixel buffer objects (PBOs) should be used to manage your image data. See Best Practices for Working with Texture Data.

Use framebuffer objects (FBOs) when you need to render to offscreen memory.

Framebuffer objects allow your application to create offscreen rendering targets without many of the limitations of platform-dependent interfaces. See Rendering to a Framebuffer Object.

Generate objects before binding them.